An interesting public conversation has grown out of recent developments in artificial intelligence (AI), with both optimistic and pessimistic perspectives gaining a fair amount of media attention. Will widespread general AI be disastrous (as Stephen Hawking, Elon Musk and Bill Gates have warned in recent years), or will it deliver an unprecedented supply of super-intelligent agents to solve practically all problems facing humanity, as suggested by Ray Kurzweil and many others?

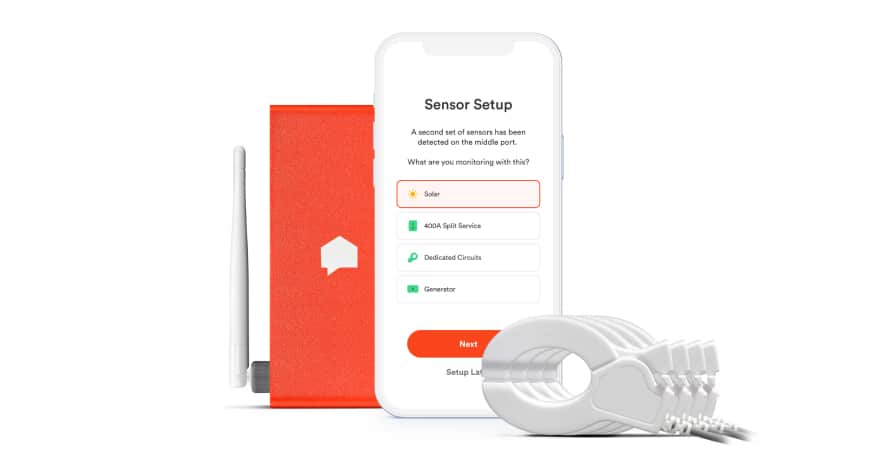

At Sense, we believe that the impact of AI for the foreseeable future will be quite different from either of these extremes. The continued development of well-engineered, domain-specific AI systems will provide us with tools to address important issues. Millions of people already use domain-specific AI systems on a daily basis to perform speech recognition, have their credit-card transactions verified or find good prices online. Such systems are also an important tool in enabling Sense to detect and categorize individual devices in a home just from their electrical signatures.

The far more difficult problem of creating “artificial general intelligence,” which can nimbly adapt itself to any task domain, remains as elusive as the Holy Grail.

Optimistic Commentators Think AlphaGo is the AI Holy Grail

The AlphaGo system’s recent victory over Lee Sedol, one of the strongest current players of the ancient board game Go, gives us a concrete case for discussing these issues. As the following quotes indicate, many sophisticated observers have suggested that this victory announces a new era in which AI systems rapidly approach (or even exceed) human capabilities in a wide range of tasks requiring intuition or intelligence. That stands in direct contrast to the more conservative expectations we hold at Sense for the foreseeable future:

"At this point it is not clear whether there is any limitation to the improvement AlphaGo is capable of. (If only the same could be said of our old-fashioned brains.) It may be that this constitutes the beating heart of any intelligent system, the Holy Grail that researchers are pursuing—general artificial intelligence, rivaling human intelligence in its power and flexibility."

--Christof Koch, “How the Computer Beat the Go Master”

… Zoubin Ghahramani, of the University of Cambridge, said:

"This is certainly a major breakthrough for AI, with wider implications."

--BBC, "Google achieves AI 'breakthrough' by beating Go champion."

"Because of this versatility, I see AlphaGo not as a revolutionary breakthrough in itself, but rather as the leading edge of an extremely important development: the ability to build systems that can capture intuition and learn to recognize patterns."

--Michael Nielsen, "Is AlphaGo Really Such A Big Deal?"

There are a few reasons for these optimistic conclusions:

First, earlier attempts to develop automated Go players had been singularly unsuccessful. This produced a widespread opinion that Go can really only be mastered by developing the same type of intuitive grasp that allows human creativity to span innumerable domains.

Second, the developers of AlphaGo reported that the system experienced a spurt of improvement based on intensive playing against itself in the months leading up to the eventual victory over Lee Sedol. Such self-improvement suggests that any limit, including human-level intelligence, is within reach given enough time and computing power. That is to say, Go is only the beginning.

Third, AlphaGo utilizes Deep Learning—an approach to pattern recognition that has achieved notable successes in applications as varied as self-driving cars, image recognition and speech recognition. Therefore, many observers have the impression that Deep Learning could be the key component driving intelligence across an unlimited range of applications.

From these arguments, it is easy to think that Google researchers have uncovered the principles of human-like Artificial General Intelligence, and that the only barriers to matching human intelligence on any given task are engineering skills and data. As I'll discuss below, I do not think this is the case.

Does Deep Learning Live Up to the Hype?

How much innovation do these developments really represent? The honest answer is “less than meets the eye.” Improvement from self-play, for example, has been around for at least six decades. (It was utilized by Arthur Samuel in his groundbreaking checkers program during the 1950s.) And the conceptual basis for Deep Learning arguably contains nothing that was not known 30 years ago. One apparently novel component that accompanied early Deep Learning was the Restricted Boltzmann Machine (RBM), which seemed to provide such learning with a depth of layering previously unachievable. However, subsequent research has rendered the RBM superfluous, and state-of-the-art Deep Learning now relies on the same principles as the “backpropagation” systems of yesteryear (though with much more data, and an increased bag of heuristic tricks to make computation feasible). All this is to say, Deep Learning isn’t as new and groundbreaking as the breathlessly excited commentary may lead you to believe.

In fact, the most important conceptual progress leading to AlphaGo’s victory was the realization that Monte Carlo tree search could be used to overcome the fundamental difficulty that had prevented chess-like software from achieving respectable performance in Go. This type of narrow, domain-specific insight has been important in several of the prototypical successes of AI, but doesn’t measure up to human intelligence’s cross-domain creativity. Such intelligence has several crucial characteristics (including one-shot learning, learning from learning, utilization of diverse information sources, binding of properties to specific objects) which are distinctly absent in Deep Learning solutions. Basically, the breakthrough that allowed a computer to successfully play Go didn’t use the broader type of learning that human brains can use, so the “Holy Grail” of AI is still out of reach.
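For readers who have not met Monte Carlo tree search before, here is a minimal sketch of the idea in Python. It is not AlphaGo's method or code: the toy game (a version of Nim in which players alternately take one to three stones and whoever takes the last stone wins), the names `Node`, `rollout` and `mcts_best_move`, and the exploration constant 1.4 are all assumptions chosen for illustration. The point is only that the search estimates how good a move is by playing many random games from it, rather than by relying on a hand-written evaluation of the position.

```python
import math
import random


class Node:
    """One position in the search tree of the toy Nim game (illustrative only)."""

    def __init__(self, stones, parent=None, move=None):
        self.stones = stones          # stones left for the player about to move
        self.parent = parent
        self.move = move              # move that led from the parent to this node
        self.children = []
        self.untried = [m for m in (1, 2, 3) if m <= stones]
        self.visits = 0
        self.wins = 0.0               # wins for the player who moved INTO this node


def uct_child(node, c=1.4):
    """Pick the child that best balances a high win rate against few visits."""
    return max(
        node.children,
        key=lambda ch: ch.wins / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def rollout(stones, rng):
    """Play random moves to the end; True if the player to move at the start wins."""
    turn = 0
    while stones > 0:
        stones -= rng.randint(1, min(3, stones))
        turn ^= 1
    # Whoever took the last stone wins; an odd number of moves means the starter did.
    return turn == 1


def mcts_best_move(stones, iterations=2000, seed=0):
    """Return the move (1, 2 or 3) that the search visited most often."""
    rng = random.Random(seed)
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: walk down the tree while every move has been tried.
        while not node.untried and node.children:
            node = uct_child(node)
        # 2. Expansion: add one new child for a move not yet tried.
        if node.untried:
            move = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: finish the game with random moves.
        mover_wins = rollout(node.stones, rng)
        # 4. Backpropagation: credit alternates between the two players.
        result = 0.0 if mover_wins else 1.0
        while node is not None:
            node.visits += 1
            node.wins += result
            result = 1.0 - result
            node = node.parent
    return max(root.children, key=lambda ch: ch.visits).move
```

Calling `mcts_best_move(3)` should return 3, since taking all three stones wins on the spot. Even in this toy form, everything specific to the game (the legal moves, the winning condition) has to be supplied by hand, which is the kind of domain-specific engineering discussed here.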

From this perspective, AlphaGo was successful for exactly the same reasons that numerous specialized pattern-recognition algorithms have succeeded in the past two to three decades: careful feature design, a large amount of training data and clever engineering combine to make a previously intractable problem manageable.

This is the same approach we are taking to load disaggregation at Sense: whether using Deep Learning, Hidden Markov Models, or some other framework for learning, we invariably find that sufficient accuracy is only achieved once we apply a large amount of domain knowledge to the task at hand. Of course, the folks at Google have no illusions about this matter, as this fragment from a recent blog post about the AlphaGo triumph shows:

Deep neural networks are already used at Google for specific tasks—like image recognition, speech recognition, and Search ranking. However, we’re still a long way from a machine that can learn to flexibly perform the full range of intellectual tasks a human can—the hallmark of true artificial general intelligence.

The AlphaGo team certainly succeeded where many had failed previously, and in doing so they overturned some strongly held beliefs. Should we conclude that the barrier to general AI has been breached, or that the game of Go was, in fact, not such a good test for general intelligence? Based on current evidence, the latter seems much more likely.